For a while I’ve been seeing a lot of great things about GPT-3, and it’s lit a small fire of interest in my head. I’ve followed some of the work behind PromptBase and, most recently, Nat Friedman’s tweet about using GPT-3 to control a web browser. At that point it finally clicked: I had an interesting idea to play with and learn more about GPT-3. Using it to control Home Assistant!

Signing up

To try GPT-3 for free you’ll need a couple of things: a valid email address and a phone number that can receive SMS messages. (There are some geographic restrictions to be aware of; you can check whether your country is on this list.)

- Create an account at https://beta.openai.com/signup/

- You’ll get a confirmation email to verify your account (click the link in the email)

- You’ll need to provide a real phone number to get a confirmation text.

- Enter the code that you were sent

- New accounts get some free credit ($18, I think). Each query uses ‘tokens’, which works out to a cent or so per execution. That’s pretty generous and leaves lots of room to experiment and explore the product.
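To put “a cent or so” per query into perspective, here’s a rough calculation of how many queries the free credit covers. It’s only a sketch: the $0.02 per 1,000 tokens price and ~500 tokens per query are assumed figures for illustration, not quoted pricing, so check OpenAI’s pricing page for current numbers. `Decimal` is used to avoid float rounding surprises when dividing money.

```python
from decimal import Decimal


def cost_per_query(price_per_1k_tokens: Decimal, tokens_per_query: int) -> Decimal:
    """Dollar cost of one query, given an (assumed) price per 1,000 tokens."""
    return price_per_1k_tokens * tokens_per_query / 1000


def queries_covered(credit: Decimal, price_per_1k_tokens: Decimal, tokens_per_query: int) -> int:
    """Whole number of queries a credit balance pays for."""
    return int(credit // cost_per_query(price_per_1k_tokens, tokens_per_query))


# Hypothetical figures: $0.02 per 1,000 tokens, ~500 tokens (prompt + completion) per query.
per_query = cost_per_query(Decimal("0.02"), 500)
print(per_query)  # 0.01
print(queries_covered(Decimal("18"), Decimal("0.02"), 500))  # 1800
```

Longer prompts (more examples) raise `tokens_per_query`, so the per-query cost grows with every example you add.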

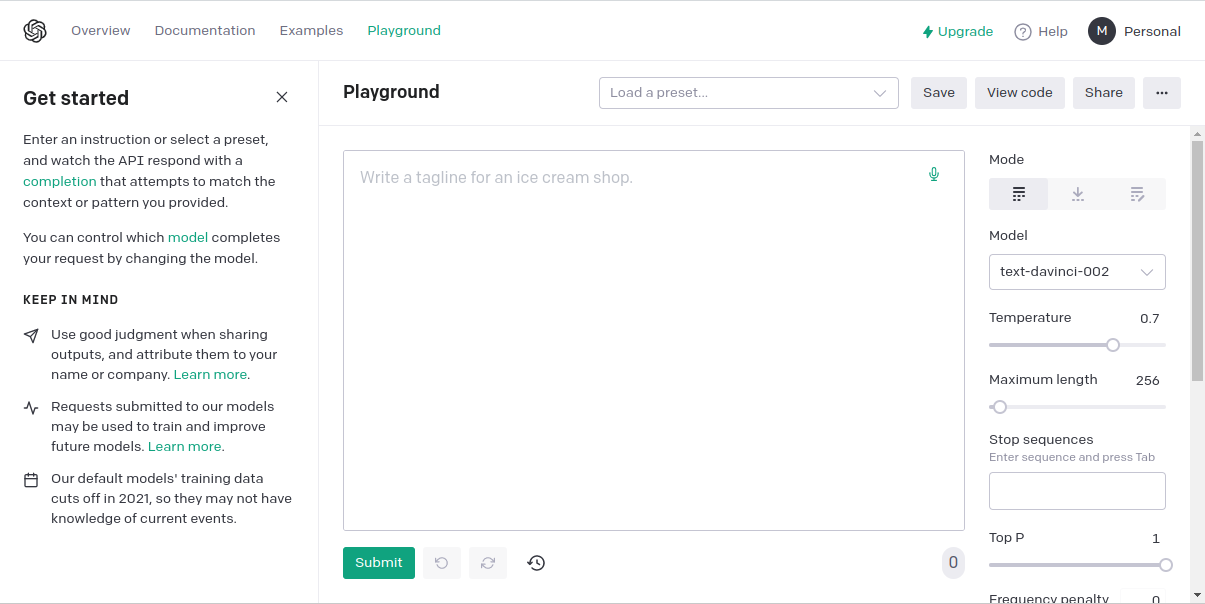

Using the playground

The playground is where you experiment with and build prompts for use in your applications, letting you iterate on them rapidly.

Building my first prompt

From what I’ve found, prompt engineering seems to be a dark and unexplained art, but judging from the hiring section on PromptBase, it can be quite well paid. People have shared some examples on Twitter and there are some collections on GitHub, but I’ve mostly explored it by playing with prompts and tweaking them to find out what works.

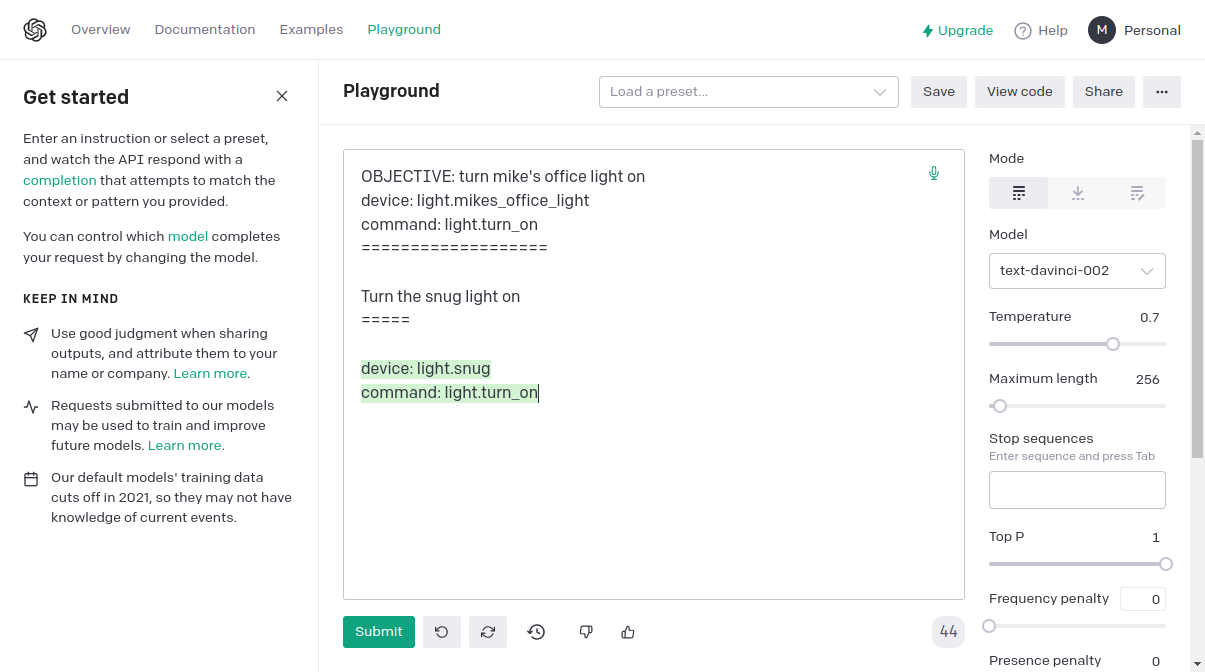

I started with a simple prompt, with fairly low expectations if I’m honest. What I want to be able to do is pass in unstructured conversational text and get back the Home Assistant service and the entity_id, reasonably inferred from the text (my Home Assistant entity names are fairly sane).

OBJECTIVE: turn mike's office light on

device: light.mikes_office_light

command: light.turn_on

===================
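Driving this prompt from code rather than the playground means appending the new text after the example in the same shape. A minimal sketch, assuming the prompt ends on `device:` so that the model’s continuation is the answer; that choice, the names `FEW_SHOT`, `build_prompt` and `parse_answer`, and the `light.snug_light` entity are all hypothetical. The parser also shows how much fiddly string handling the plain-text format needs.

```python
FEW_SHOT = (
    "OBJECTIVE: turn mike's office light on\n"
    "device: light.mikes_office_light\n"
    "command: light.turn_on\n"
    "===================\n"
)


def build_prompt(objective: str) -> str:
    """Append a new objective after the example, leaving 'device:' for the model to fill in."""
    return f"{FEW_SHOT}OBJECTIVE: {objective}\ndevice:"


def parse_answer(completion: str) -> dict:
    """Read the 'key: value' lines the model wrote after 'device:'."""
    fields = {}
    # Re-attach the label the prompt ended with so every line has the same shape.
    for line in ("device:" + completion).splitlines():
        if line.startswith("="):  # separator: the model has started another example
            break
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


prompt = build_prompt("turn the snug light on")
answer = parse_answer(" light.snug_light\ncommand: light.turn_on")  # hypothetical completion
print(answer)  # {'device': 'light.snug_light', 'command': 'light.turn_on'}
```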

When I passed in the text “turn the snug light on”, it responded with the device and command. At this point I was pretty blown away, and could see what a lot of the excitement about GPT-3 is about!

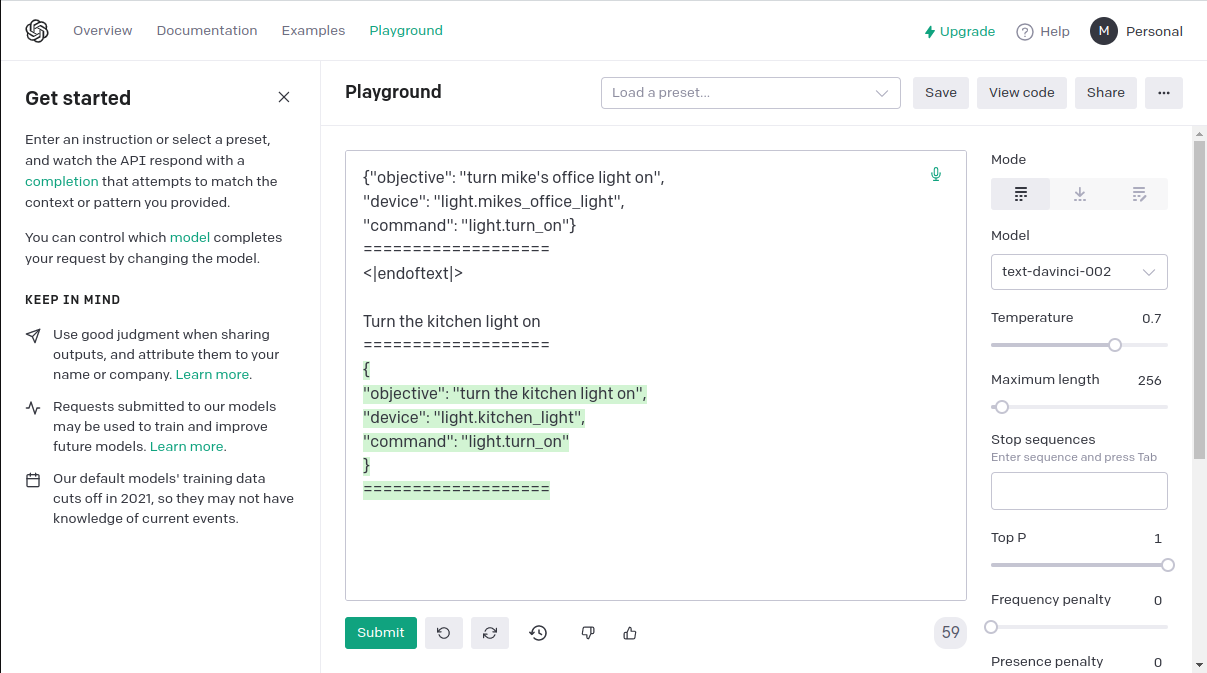

Building up the prompt

I quickly realised that my first prompt, whilst working, wasn’t going to be enough: I would have to parse the response and write a bunch of code to do it, which was more effort than I wanted to invest. So, can GPT-3 give me back the JSON that I wanted? Absolutely it can!

{

"objective": "turn mike's office light on",

"device": "light.mikes_office_light",

"command": "light.turn_on"

}

===================
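With JSON coming back, parsing mostly becomes a `json.loads` call plus a couple of sanity checks. A sketch, assuming the completion contains the object (or the rest of one the prompt already opened, passed in as `prefix`) and that anything after the `===` separator can be discarded; `parse_completion` and `REQUIRED_KEYS` are hypothetical names. Malformed JSON raises `json.JSONDecodeError`, which is a subclass of `ValueError`, so callers only need to catch one exception type.

```python
import json

REQUIRED_KEYS = ("device", "command")


def parse_completion(completion: str, prefix: str = "") -> dict:
    """Turn GPT-3's completion into a dict with at least 'device' and 'command'.

    prefix: any part of the JSON object the prompt itself opened (e.g. '{'),
    or "" if the model returns the whole object.
    """
    # Drop anything after the separator in case the model starts another example.
    text = (prefix + completion).split("===", 1)[0]
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object in completion")
    data = json.loads(text[start:end + 1])
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"completion is missing {missing}")
    return data


# Hypothetical completion for "turn the snug light on".
completion = (
    '{\n"objective": "turn the snug light on",\n'
    '"device": "light.snug_light",\n'
    '"command": "light.turn_on"\n}\n'
)
result = parse_completion(completion)
print(result["device"], result["command"])  # light.snug_light light.turn_on
```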

I’d still need to test how far this prompt will get me and teach it about other device and service types, but for a Sunday afternoon project I’m happy to leave it here.

Building the app

Given that GPT-3 is doing all of the heavy lifting, this could be used in a variety of scenarios: it could be hooked up to a Telegram bot, Rhasspy or an Alexa skill, for example. For now, whilst I’m just using this as a learning opportunity, I’ve built it to accept a simple command-line input.
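As a rough sketch of the command-line side, the script below wraps the input in the JSON example prompt and POSTs it to OpenAI’s Completions endpoint using only the standard library. The model name, the `max_tokens` value, the `OPENAI_API_KEY` environment variable and the choice to open the next JSON object in the prompt are all assumptions; check OpenAI’s API reference for the models currently available. Setting `temperature` to 0 and using the separator as a stop sequence keeps the answer short and as repeatable as possible.

```python
import argparse
import json
import os
import urllib.request

COMPLETIONS_URL = "https://api.openai.com/v1/completions"
MODEL = "text-davinci-002"  # assumption: use whichever completion model your account offers
SEPARATOR = "==================="

# The JSON example from the prompt, followed by the separator.
FEW_SHOT = """{
"objective": "turn mike's office light on",
"device": "light.mikes_office_light",
"command": "light.turn_on"
}
===================
"""


def build_prompt(objective: str) -> str:
    """Open the next JSON object ourselves so the model only has to finish it."""
    return FEW_SHOT + '{\n"objective": ' + json.dumps(objective) + ",\n"


def build_completion_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Prepare (but don't send) a POST to the Completions endpoint."""
    body = {
        "model": model,
        "prompt": prompt,
        "max_tokens": 64,     # the answer is a couple of short JSON fields
        "temperature": 0,     # prefer the most likely mapping over creative variety
        "stop": [SEPARATOR],  # stop before the model invents another example
    }
    return urllib.request.Request(
        COMPLETIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def complete(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["choices"][0]["text"]


def parse_args(argv=None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Turn plain English into a Home Assistant command via GPT-3.")
    parser.add_argument("text", nargs="+", help="e.g. turn the snug light on")
    return parser.parse_args(argv)


def main(argv=None) -> None:
    args = parse_args(argv)
    prompt = build_prompt(" ".join(args.text))
    request = build_completion_request(prompt, os.environ["OPENAI_API_KEY"])
    print(complete(request))
```

To use it as a script, call `main()` under the usual `if __name__ == "__main__":` guard and run something like `python gpt_ha.py turn the snug light on` (the filename is hypothetical). The printed text is the remainder of the JSON object, so it still needs parsing before it goes anywhere near Home Assistant.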

The easiest part of the whole process was actually the integration with Home Assistant; its API (documentation can be found here) made it very easy. If you’ve been paying attention, you’ll recognise what the prompt from earlier was trying to achieve: the command and entity_id are in exactly the form Home Assistant expects when you call a service via the API.

After some processing of the text and parsing it into JSON, we can take the data we get back from GPT-3 and send it straight to Home Assistant.
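Home Assistant’s REST API calls a service with `POST /api/services/<domain>/<service>`, authenticated by a long-lived access token sent as a Bearer header, with the target entity in the JSON body as `entity_id`. That’s why `light.turn_on` maps so neatly: splitting it on the first dot gives the domain and the service. Below is a standard-library sketch; the base URL `http://homeassistant.local:8123`, the token placeholder and the `light.snug_light` entity are assumptions for your own setup.

```python
import json
import urllib.request


def build_service_call(base_url: str, token: str, command: str, entity_id: str) -> urllib.request.Request:
    """Map GPT-3's "command" and "device" fields onto a Home Assistant service call."""
    if "." not in command:
        raise ValueError(f"expected '<domain>.<service>', got {command!r}")
    domain, service = command.split(".", 1)  # "light.turn_on" -> ("light", "turn_on")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/services/{domain}/{service}",
        data=json.dumps({"entity_id": entity_id}).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def call_service(request: urllib.request.Request) -> list:
    """Send the call; Home Assistant responds with the states that changed."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


# Hypothetical values: a parsed GPT-3 answer and a placeholder token.
answer = {"device": "light.snug_light", "command": "light.turn_on"}
request = build_service_call("http://homeassistant.local:8123", "YOUR_LONG_LIVED_TOKEN",
                             answer["command"], answer["device"])
print(request.full_url)  # http://homeassistant.local:8123/api/services/light/turn_on
```

Validating the command before building the URL means a garbled completion fails loudly instead of hitting an odd endpoint.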

Future

Obviously this is a very simple implementation. If I get more time in the future, it would be interesting to see how easily it could be extended to control more types of devices (e.g. plugs, or thermostats for heating) without having to write a huge prompt.
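One way to grow the prompt without it ballooning is to keep a single example per service type and generate the prompt text from data. This is a hypothetical sketch: the plug and thermostat entity ids are made up, and the optional `data` field (for values such as a target temperature, which `climate.set_temperature` needs) is an assumption that hasn’t been tested against GPT-3.

```python
import json

SEPARATOR = "==================="

# Hypothetical entity ids; swap in your own. One example per service type keeps
# the prompt short while still showing the model each pattern once.
EXAMPLES = [
    {"objective": "turn mike's office light on",
     "device": "light.mikes_office_light",
     "command": "light.turn_on"},
    {"objective": "switch the desk plug off",
     "device": "switch.desk_plug",
     "command": "switch.turn_off"},
    {"objective": "set the hallway heating to 20 degrees",
     "device": "climate.hallway_thermostat",
     "command": "climate.set_temperature",
     "data": {"temperature": 20}},
]


def build_few_shot(examples) -> str:
    """Render each example one key per line (indent=0), each followed by the separator."""
    return "".join(json.dumps(ex, indent=0) + "\n" + SEPARATOR + "\n" for ex in examples)


print(build_few_shot(EXAMPLES))
```

Whatever calls Home Assistant would then need to merge `data` into the request body alongside `entity_id`.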

Code

You can grab the simple command line app that I built from my GitHub.
